In this post I demonstrate a simple container deployment setup: a Jenkins pipeline that publishes an image to Elastic Container Registry (ECR) and deploys it to Fargate on Elastic Container Service (ECS). I assume you have Jenkins running, with a pipeline and a Git repo webhook tied to it. Besides the default Jenkins plugins, you’ll need the Pipeline Utility Steps plugin, which provides the readJSON step used below.

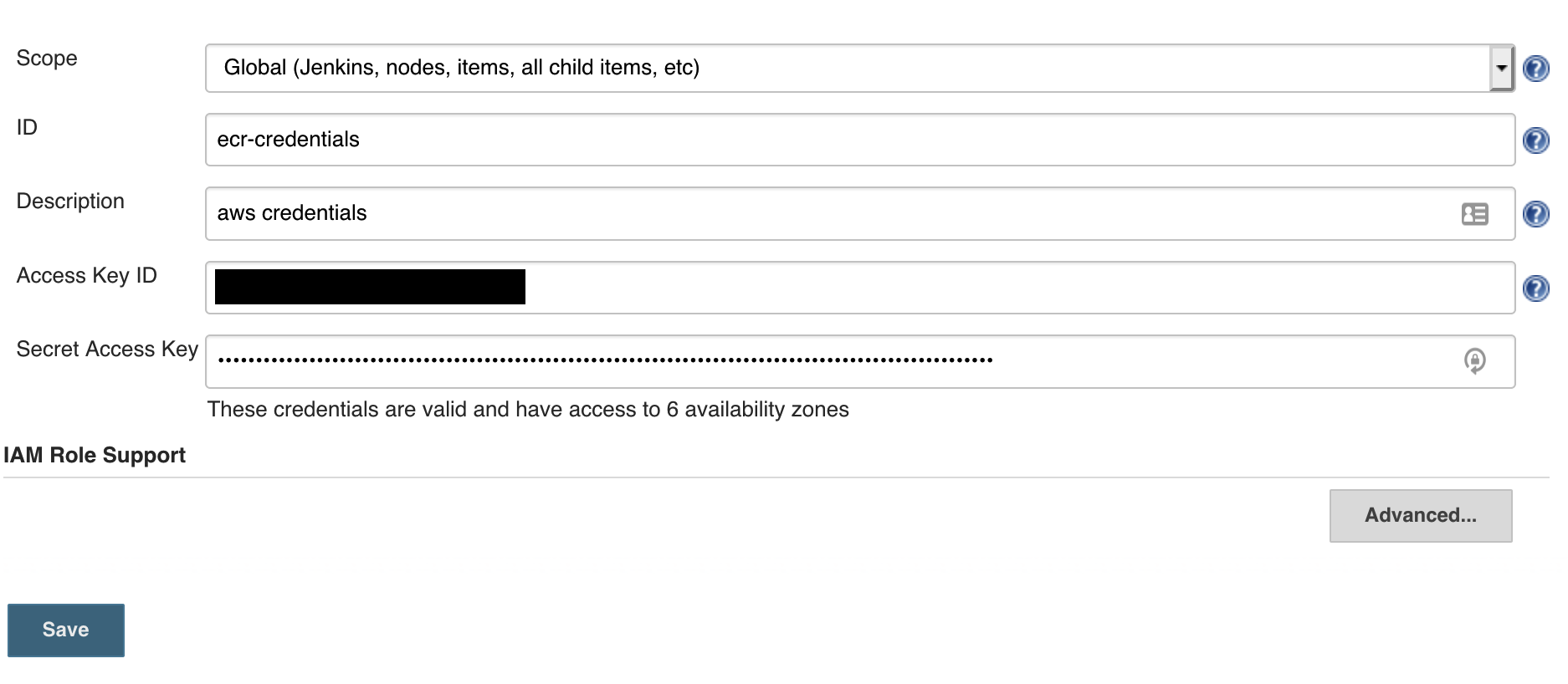

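I also assume you already have an ECR repository, an ECS Fargate cluster, and an AWS service account with credentials. I decided not to use the AWS credentials plugin since it is too implicit. Instead, store the access key ID and the secret access key in Jenkins as a regular username and password credential. The Jenkinsfile below looks this credential up under the ID aws-key.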

Put the following taskdef_template.json and Jenkinsfile in the top directory of your project:

The taskdef_template.json file:

    {
      "family": "%TASK_FAMILY%",
      "networkMode": "awsvpc",
      "executionRoleArn": "%EXECUTION_ROLE_ARN%",
      "containerDefinitions": [
        {
          "image": "%REPOSITORY_URI%:%SHORT_COMMIT%",
          "name": "%SERVICE_NAME%",
          "cpu": 10,
          "memory": 256,
          "essential": true,
          "portMappings": [
            {
              "containerPort": 80,
              "hostPort": 80
            }
          ]
        }
      ],
      "requiresCompatibilities": ["FARGATE"],
      "cpu": "256",
      "memory": "512"
    }
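
The %NAME% tokens in this template are placeholders that the deploy stage of the Jenkinsfile fills in with sed. If you want to try the substitution outside Jenkins first, a minimal sketch could look like the one below. The function names render_taskdef and check_rendered are my own invention for illustration and not part of any tool; the placeholder names are the ones used in the template.

```shell
#!/bin/sh
# Hypothetical helpers (not part of Jenkins or the AWS CLI).

# Render a task definition template to stdout by replacing %NAME% placeholders.
# Usage: render_taskdef TEMPLATE REPOSITORY_URI SHORT_COMMIT TASK_FAMILY SERVICE_NAME EXECUTION_ROLE_ARN
render_taskdef() {
    sed -e "s;%REPOSITORY_URI%;$2;g" \
        -e "s;%SHORT_COMMIT%;$3;g" \
        -e "s;%TASK_FAMILY%;$4;g" \
        -e "s;%SERVICE_NAME%;$5;g" \
        -e "s;%EXECUTION_ROLE_ARN%;$6;g" \
        "$1"
}

# Succeed only if no %PLACEHOLDER% tokens are left in the rendered file.
# Any leftover tokens are printed with their line numbers.
check_rendered() {
    if grep -n '%[A-Z_][A-Z_]*%' "$1"; then
        echo "unreplaced placeholders in $1" >&2
        return 1
    fi
}
```

You could call it as render_taskdef taskdef_template.json "$REPOSITORY_URI" "$SHORT_COMMIT" "$TASK_FAMILY" "$SERVICE_NAME" "$EXECUTION_ROLE_ARN" > taskdef.json, followed by check_rendered taskdef.json. Because ; is the sed delimiter here, this only works while none of the values contain ; or the characters & and \, which sed treats specially in a replacement. Repository URIs and role ARNs normally do not.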

The Jenkinsfile:

    @Library('github.com/releaseworks/jenkinslib') _

    pipeline {
        environment {
            REPOSITORY_URI = 'xxxxxxxxxxxx.dkr.ecr.eu-west-1.amazonaws.com/container-app-demo'
            SERVICE_NAME = 'container-app-demo'
            TASK_FAMILY = "container-app-demo" // at least one container needs to have the same name as the task definition
            DESIRED_COUNT = "1"
            CLUSTER_NAME = "my-ECS-cluster"
            SHORT_COMMIT = "${GIT_COMMIT[0..7]}" // first 8 characters of the commit hash, used as the image tag
            AWS_ID = credentials("aws-key") // username/password credential; exposes AWS_ID_USR and AWS_ID_PSW
            AWS_ACCESS_KEY_ID = "${env.AWS_ID_USR}"
            AWS_SECRET_ACCESS_KEY = "${env.AWS_ID_PSW}"
            AWS_DEFAULT_REGION = "eu-west-1"
            EXECUTION_ROLE_ARN = "arn:aws:iam::xxxxxxxxxxxx:role/ecsTaskExecutionRole"
        }

        agent any

        stages {
            stage('Build image') {
                steps {
                    script {
                        // Build image in the top directory
                        def dockerImage = docker.build("$REPOSITORY_URI", ".")
                    }
                }
            }

            stage('Test') {
                steps {
                    echo 'Testing..'
                }
            }

            stage('Publish Image to ECR') {
                steps {
                    script {
                        withElasticContainerRegistry {
                            // Tag the image with the short commit hash and push it to ECR
                            docker.image("$REPOSITORY_URI").push("$SHORT_COMMIT")
                        }
                    }
                }
            }

            stage('Deploy Image to ECS') {
                steps {
                    // Prepare the task definition file by filling in the template placeholders
                    sh """sed -e "s;%REPOSITORY_URI%;${REPOSITORY_URI};g" -e "s;%SHORT_COMMIT%;${SHORT_COMMIT};g" -e "s;%TASK_FAMILY%;${TASK_FAMILY};g" -e "s;%SERVICE_NAME%;${SERVICE_NAME};g" -e "s;%EXECUTION_ROLE_ARN%;${EXECUTION_ROLE_ARN};g" taskdef_template.json > taskdef_${SHORT_COMMIT}.json"""

                    script {
                        // Register the task definition and read back the revision number
                        AWS("ecs register-task-definition --output json --cli-input-json file://${env.WORKSPACE}/taskdef_${SHORT_COMMIT}.json > ${env.WORKSPACE}/temp.json")
                        def projects = readJSON file: "${env.WORKSPACE}/temp.json"
                        def TASK_REVISION = projects.taskDefinition.revision

                        // Update the service to run the new task definition revision
                        AWS("ecs update-service --cluster ${CLUSTER_NAME} --service ${SERVICE_NAME} --task-definition ${TASK_FAMILY}:${TASK_REVISION} --desired-count ${DESIRED_COUNT}")
                    }
                }
            }

            stage('Remove docker image') {
                steps {
                    // Remove both local tags: push() also tagged the image with the short
                    // commit hash, so removing only the untagged name would leave it behind
                    sh "docker rmi ${REPOSITORY_URI}:${SHORT_COMMIT} ${REPOSITORY_URI}"
                }
            }
        }
    }

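Replace the values in the environment block at the top with your own. The steps in the Jenkinsfile are quite straightforward:

- Build the container image from the Dockerfile in the top directory (you can change the path if needed);
- Run some tests (just a placeholder in this example);
- Publish the image to the ECR repo, tagged with the short commit hash;
- Create a new task definition with this new image and update the ECS service with this task definition;
- Remove the local Docker image from the build agent.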
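
To make the deploy step more concrete, here is a rough sketch of the same two AWS calls as a plain shell function. It assumes the aws CLI is installed and picks up the usual AWS_* environment variables; the pipeline above goes through the AWS helper of the shared library instead. The function name deploy_taskdef is hypothetical, and the final wait call is an optional extra that the Jenkinsfile does not perform.

```shell
#!/bin/sh
# Hypothetical helper: register a rendered task definition and point the
# service at the new revision. Assumes the aws CLI is on the PATH.
# Usage: deploy_taskdef TASKDEF_FILE CLUSTER SERVICE FAMILY DESIRED_COUNT
deploy_taskdef() {
    taskdef_file=$1
    cluster=$2
    service=$3
    family=$4
    desired_count=$5

    # --query extracts the revision number directly, so no temp.json is needed.
    revision=$(aws ecs register-task-definition \
        --cli-input-json "file://$taskdef_file" \
        --query 'taskDefinition.revision' --output text) || return 1

    # Switch the service to the new revision.
    aws ecs update-service \
        --cluster "$cluster" --service "$service" \
        --task-definition "$family:$revision" \
        --desired-count "$desired_count" >/dev/null || return 1

    # Optional: block until the service is stable again or the waiter times out.
    aws ecs wait services-stable \
        --cluster "$cluster" --services "$service" || return 1

    # Print the family:revision pair that is now deployed.
    echo "$family:$revision"
}
```

A call such as deploy_taskdef "taskdef_$SHORT_COMMIT.json" "$CLUSTER_NAME" "$SERVICE_NAME" "$TASK_FAMILY" "$DESIRED_COUNT" prints the family:revision pair on success and returns a non-zero status as soon as one of the AWS calls fails, which would fail the build if used in an sh step.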